We all love Dapper micro-ORM.

Many developers have realized that although Entity Framework offers some features that Dapper doesn’t, Dapper is still more flexible (and much much faster) than EF. And many have realized that the right tool for the right job sometimes involving using both EF for some things and Dapper for others. And one of the usages where Dapper is the king is for building dynamic queries.

Dynamic SQL

Old-school developers (those who have been coding since the late 90s or early 2000s, probably in VB6, ASP3, or PHP) will probably remember code like this:

string sql = "SELECT * FROM [Product] WHERE 1=1";

if (!string.IsNullOrEmpty(Request["ProductName"]))

sql += " AND Name LIKE '" + Request["ProductName"].Replace("'", "''") + "'"; // this replace!

if (!string.IsNullOrEmpty(Request["SubCategoryId"]))

sql += " AND ProductSubcategoryID = " + Request["SubCategoryId"].Replace("'", "''"); // this replace!

// here is where you pray that you've correctly sanitized inputs against sql-injection

var products = cn.Query<Product>(sql);

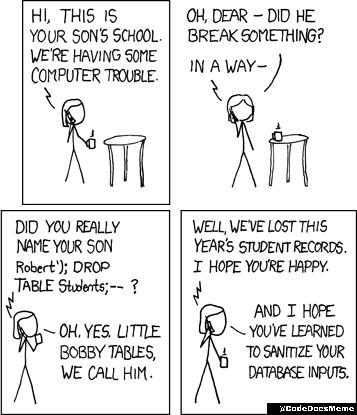

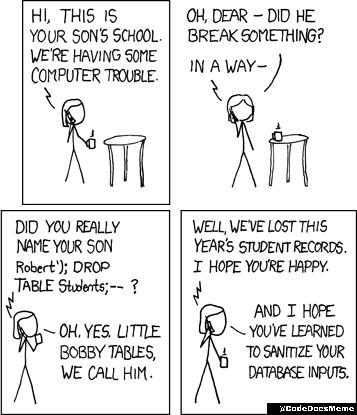

Basically we were all sending dynamic SQL statements directly to our databases. And since user-input can be malicious (see below), we had to manually sanitize user-input to avoid SQL-Injection attacks.

Hello Bobby Tables

If you weren’t sanitizing your input correctly, you were at serious risk. SQL-injection is probably the most popular web-hacking technique. If you forgot to sanitize something like an “id” querystring, you could be opening a huge vulnerability and possibly exposing all your database, not only for reading but also for modifications.

Parameterized SQL

Even if you’re old school (hey VB6 guy, I’m looking at you again) you probably know that Dynamically building SQL like that is not only error-prone (vulnerable to SQL-injection) but also does not benefit from SQL Server Cached Execution Plans. You probably used some kind of code-generation tool which helped you to build code like this (maybe to invoke a stored procedure, since 3-tier-architecture and “Windows DNA” were in the hype in the early 2000s):

Dim strSQL As String

Dim cmd As New ADODB.Command

strSQL = "UPDATE MyTable SET " & vbNewLine _

& " NEEDS_ID = @NEEDS_ID, " & vbNewLine _

& " OBJ_ID = @OBJ_ID, " & vbNewLine _

& " OBJ_COMMENTS = @OBJ_COMMENTS, " & vbNewLine _

& " TIME21_ID = @TIME21_ID, " & vbNewLine _

& " WHERE ID = @WHEREID"

With cmd

.ActiveConnection = Cn

.CommandText = strSQL

.Parameters.Append .CreateParameter("@NEEDS_ID", adInteger, adParamInput, 2, 12)

.Parameters.Append .CreateParameter("@OBJ_ID", adInteger, adParamInput, 2, 23)

.Parameters.Append .CreateParameter("@OBJ_COMMENTS", adVarChar, adParamInput, 250, "Some text")

.Parameters.Append .CreateParameter("@TIME21_ID", adInteger, adParamInput, 2, 34)

.Parameters.Append .CreateParameter("@ID", adInteger, adParamInput, 18, 456)

.Execute

End With

I hope the millennial developers are not so surprised that parameterized SQL already existed in the past century.

Back to modern software

Time flew by, Java and .NET emerged (and maybe submerged a little?), Reflection, Bytecode emission, Full-ORMs, Micro-ORMs, 3-tier was deprecated in favor of dozens of modern architectures, and now database access is much easier, right?

Now we don’t have to manually describe each one of our parameters to SQL. Our favorite ORMs will do that for us.

Dapper Example:

var dynamicParams = new DynamicParameters();

string sql = "SELECT * FROM [Product] WHERE 1=1";

if (productName != null)

{

sql += " AND Name LIKE @productName";

dynamicParams.Add("productName", productName);

}

if (subCategoryId != null)

{

sql += " AND ProductSubcategoryID = @subCategoryId";

dynamicParams.Add("subCategoryId", subCategoryId);

}

var products = cn.Query<Product>(sql, dynamicParams);

Don’t Repeat Yourself

The example below makes me a little upset for 2 reasons:

- I have to pass productName twice, both to the

sql string and to the dynamicParams dictionary.

Their names should match.

- I have to keep those two variables (the SQL statement and the list of parameters) separated, even though they are closely related to each other.

String Interpolation Internals

String Interpolation was introduced in C# in 2016.

Instead of doing code like this:

string name = "Rick";

int accesses = 10;

string output = string.Format("{0}, you've been here {1:n0} times.",

name, accesses);

You could do like this:

string name = "Rick";

int accesses = 10;

string output = $"{name}, you've been here {accesses:n0} times.";

Internally, when you write an interpolated string (starting with $), the compiler generates a FormattableString class, which contains both the template (as if it was {0}, you've been here {1:n0} times.), and also the list of parameters (string name and int accesses).

If your method expects a regular string, the FormattableString will be implicitly converted into a regular string, and you get the same behavior as if you just passed a string.format to your method. However, if your method expects a FormattableString class, then you have access to format and arguments isolated from each other.

FormattableString can be useful for example if we wanted to build parameterized SQL statements while letting users build their strings as if it was just regular string concatenation:

QueryBuilder ParseSQL(FormattableString query)

{

QueryBuilder myWrapper = new QueryBuilder();

string dapperSql = query.Format;

// replace {0} by "@p0",

// replace {1} by "@p1", etc..

myWrapper.SQL = dapperSql;

var dapperArgs = new Dapper.DynamicParameters();

var args = query.GetArguments();

// dapperArgs.Add("p0", args[0]);

// dapperArgs.Add("p1", args[1]); ...

myWrapper.Arguments = dapperArgs;

return myWrapper;

// now anyone can use Dapper like

// var pocos = connection.Query<POCO>(myWrapper.SQL, myWrapper.Parameters);

}

DapperQueryBuilder

Based on the idea above, I’ve created DapperQueryBuilder - which is a simple wrapper around Dapper which allows us to pass SQL parameters using string interpolation.

You can code like this:

var query = cn.QueryBuilder($"SELECT * FROM [Product] WHERE 1=1");

if (productName != null)

query.Append($"AND Name LIKE {productName}");

if (subCategoryId != null)

query.Append($"AND ProductSubcategoryID = {subCategoryId}");

var products = query.Query<Product>();

Although it looks like you’re just building a dynamic SQL (with values as inline literals), actually what you get is parameterized SQL.

In case, query will have this underlying statement: SELECT * FROM [Product] WHERE 1=1 AND Name LIKE @p0 AND ProductSubcategoryId = @p1, and will also hold the parameters @p0 = productName and @p1 = subCategoryId.

To sum, instead of using Dapper’s extension .Query<T> which extends IDbConnection and accepts a SQL string and a list of parameters, you use QueryBuilder() extension which creates a QueryBuilder where you can dynamically (and in a single statement) add new parameters and add the associated SQL clause.

Quickstart

If you liked and want to start using right now,

- Install the NuGet package Dapper-QueryBuilder

- Start using like this:

using DapperQueryBuilder;

// ...

cn = new SqlConnection(connectionString);

// If you have all your parameters at once and just want to benefit from string interpolation:

var products = cn.QueryBuilder($@"

SELECT ProductId, Name, ListPrice, Weight

FROM [Product]

WHERE [ListPrice] <= {maxPrice}

AND [Weight] <= {maxWeight}

AND [Name] LIKE {search}

ORDER BY ProductId").Query<Product>();

Or building dynamic conditions like this:

using DapperQueryBuilder;

// ...

cn = new SqlConnection(connectionString);

// If you want to dynamically add conditions

var q = cn.QueryBuilder($@"

SELECT ProductId, Name, ListPrice, Weight

FROM [Product]

WHERE 1=1 ");

q.AppendLine("AND [ListPrice] <= {maxPrice}");

q.AppendLine("AND [Weight] <= {maxWeight}");

q.AppendLine("AND [Name] LIKE {search}");

q.AppendLine("ORDER BY ProductId");

var products = q.Query<Product>();

Filters list

Some people believe incorrectly that WHERE 1=1 causes a performance hit. It doesn’t. So using this fake condition is a great trick so that you can just append additional conditions (if any) like shown in the example above.

Another option is that you can build your whole query but leave the filters to be dynamically defined (and rendered) later. DapperQueryBuilder has this special command Where() which is used to save the filters internally, and later they are replaced.

int maxPrice = 1000;

int maxWeight = 15;

string search = "%Mountain%";

var cn = new SqlConnection(connectionString);

// You can build the query manually and just use QueryBuilder to replace "where" filters (if any)

var q = cn.QueryBuilder(@"SELECT ProductId, Name, ListPrice, Weight

FROM [Product]

/**where**/

ORDER BY ProductId

");

// You just pass the parameters as if it was an interpolated string,

// and QueryBuilder will automatically convert them to Dapper parameters (injection-safe)

q.Where($"[ListPrice] <= {maxPrice}");

q.Where($"[Weight] <= {maxWeight}");

q.Where($"[Name] LIKE {search}");

// Query() will automatically build your query and replace your /**where**/ (if any filter was added)

var products = q.Query<Product>();

You can also create OR filters like this:

q.Where(new Filters(Filters.FiltersType.OR)

{

new Filter($"[Weight] <= {maxWeight}"),

new Filter($"[Name] LIKE {search}")

});

Or you can mix and match OR/AND like this:

q.Where(new Filters(Filters.FiltersType.OR)

{

new Filters(Filters.FiltersType.AND)

{

$"[Weight] <= {maxWeight}",

$"[Weight] >= {minWeight}",

}

new Filter($"[Name] LIKE {search}")

});

And even arrays can be used as interpolated strings:

var categories = new string[] { "Components", "Clothing", "Acessories" };

q.Append($"WHERE c.[Name] IN {categories}");

Fluent API (Chained-methods)

For those who like method-chaining guidance (or for those who allow end-users to build their own queries), there’s a Fluent API that allows you to build queries step-by-step mimicking dynamic SQL concatenation.

So, basically, instead of starting with a full query and just appending new filters (.Where()), the QueryBuilder will build the whole query for you:

var q = cn.QueryBuilder()

.Select($"ProductId")

.Select($"Name")

.Select($"ListPrice")

.Select($"Weight")

.From($"[Product]")

.Where($"[ListPrice] <= {maxPrice}")

.Where($"[Weight] <= {maxWeight}")

.Where($"[Name] LIKE {search}")

.OrderBy($"ProductId");

var products = q.Query<Product>();

You would get this query:

SELECT ProductId, Name, ListPrice, Weight

FROM [Product]

WHERE [ListPrice] <= @p0 AND [Weight] <= @p1 AND [Name] LIKE @p2

ORDER BY ProductId

Hope you enjoyed this post as much as I did when I wrote this library!

Full source code here.